Methods

First approach: DeepFace

First, DeepFace was used for age estimation. Because DeepFace treats age as a regression problem and returns a single estimated age, we used the estimated age of each person to classify subjects into age buckets.

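```python
from deepface import DeepFace

# metadataFilt (rows of the current fold), dbPath, gindxs and y_real
# are assumed to be defined beforehand
y_model_noEnforce = []

# iterate over all files in the fold
for i, (index, row) in enumerate(metadataFilt.iterrows()):
    # construct the filename
    filename = (dbPath + row['user_id'] + '/landmark_aligned_face.'
                + str(row['face_id']) + '.' + row['original_image'])
    # estimate the age only; enforce_detection=False avoids an exception
    # when no face is detected in the (already aligned) image
    tmp = DeepFace.analyze(filename, actions=['age'], enforce_detection=False)
    # note: recent DeepFace releases return a list of dicts (use tmp[0]['age'])
    y_model_noEnforce.append([i, tmp['age']])
    print("Process image (row %d): " % i + filename)
    print("\t model: %d, expected: " % tmp['age'] + gindxs[y_real[i]])
```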
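The loop stores only the raw age estimates; the rule used to turn them into buckets is not shown. As a minimal sketch, assuming for illustration the eight age groups of the OpenCV model from the second approach, an estimate can be assigned to the group that contains it, or to the nearest group when it falls into a gap between groups (e.g. an estimate of 22). The helper `age_to_bucket` and this nearest-group rule are hypothetical, not the project's code.

```python
# Hypothetical bucket boundaries: the eight age groups of the OpenCV model
AGE_BUCKETS = [(0, 2), (4, 6), (8, 12), (15, 20),
               (25, 32), (38, 43), (48, 53), (60, 100)]


def age_to_bucket(age):
    """Return the index of the bucket containing `age`, or of the nearest
    bucket if `age` lies in a gap (hypothetical rule; ties go to the lower bucket)."""
    def distance(bounds):
        lo, hi = bounds
        if lo <= age <= hi:
            return 0.0
        return lo - age if age < lo else age - hi

    return min(range(len(AGE_BUCKETS)), key=lambda k: distance(AGE_BUCKETS[k]))
```

With the results of the DeepFace loop, the bucket indices could then be obtained as `[age_to_bucket(age) for _, age in y_model_noEnforce]`.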

Documentation

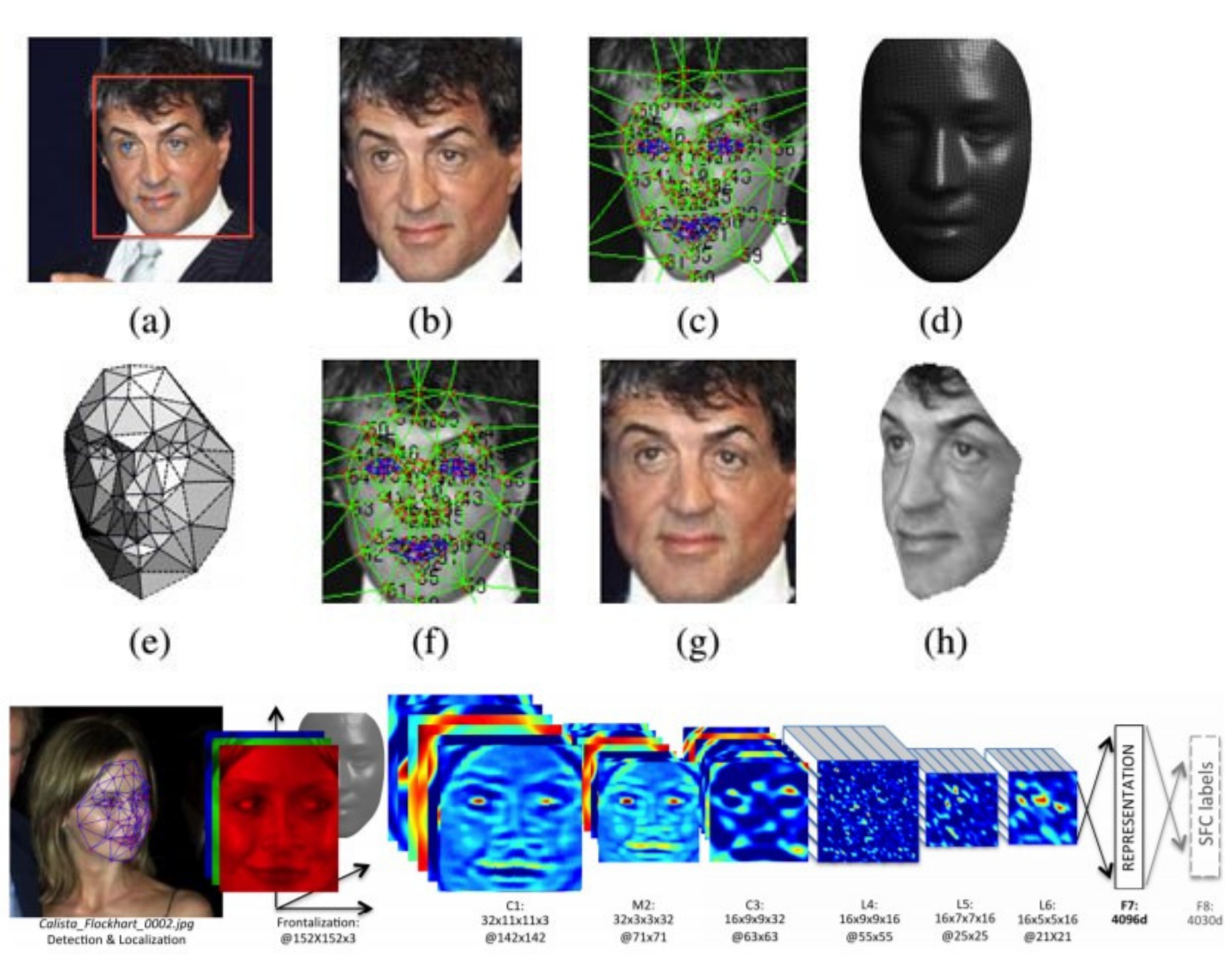

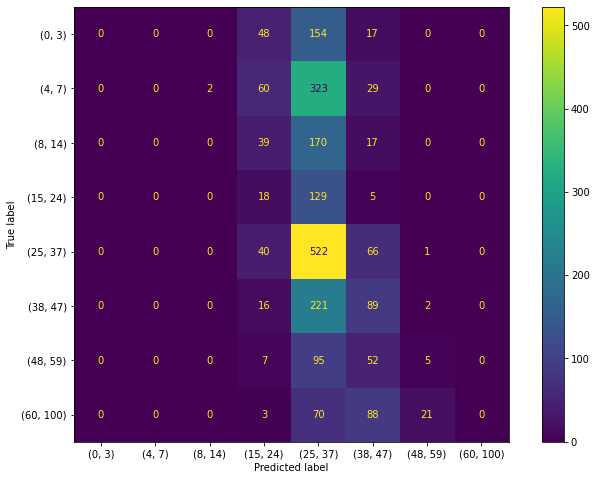

Second approach: OpenCV using a pretrained model

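For the second approach, OpenCV's `dnn` module was used together with the pretrained Caffe age-classification model by Levi and Hassner (2015). Unlike DeepFace, this model directly outputs a probability for each of eight age buckets.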

```python
import os

import cv2

# age groups predicted by the Levi & Hassner age network, in output order
AGE_BUCKETS = ["(0, 2)", "(4, 6)", "(8, 12)", "(15, 20)",
               "(25, 32)", "(38, 43)", "(48, 53)", "(60, 100)"]

# load the pretrained Caffe model (network definition + weights)
prototxtPath = os.path.sep.join(["/home/jost/dev/ssip2021/models", "deploy_age.prototxt"])
weightsPath = os.path.sep.join(["/home/jost/dev/ssip2021/models", "age_net.caffemodel"])
ageNet = cv2.dnn.readNet(prototxtPath, weightsPath)

y_model_second = []
y_model_second_conf = []

# iterate over all files in the fold
for i, (index, row) in enumerate(metadataFilt.iterrows()):
    # construct the filename
    filename = (dbPath + row['user_id'] + '/landmark_aligned_face.'
                + str(row['face_id']) + '.' + row['original_image'])
    face = cv2.imread(filename)
    # resize to 227x227 and subtract the per-channel mean values
    faceBlob = cv2.dnn.blobFromImage(face, 1.0, (227, 227),
                                     (78.4263377603, 87.7689143744, 114.895847746),
                                     swapRB=False)

    # predict age: take the bucket with the highest probability
    ageNet.setInput(faceBlob)
    preds = ageNet.forward()
    j = preds[0].argmax()
    age = AGE_BUCKETS[j]
    ageConfidence = preds[0][j]
    y_model_second.append(age)
    y_model_second_conf.append(ageConfidence)

    # output data
    print("Process image (row %d): " % i + filename)
    print("\t model: %s, expected: %s" % (age, metadataFilt['age'][index]))
```
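To make the preprocessing step explicit: with a scale factor of 1.0 and `swapRB=False`, `cv2.dnn.blobFromImage` resizes the face to 227×227, subtracts the given per-channel mean from every pixel and rearranges the result into a 1×3×227×227 (NCHW) float array. The NumPy sketch below reproduces the last two steps for an image that is assumed to be already resized, so the resize itself is omitted. `blob_from_resized` is a hypothetical helper for illustration, not part of OpenCV.

```python
import numpy as np

# per-channel mean values passed to blobFromImage above (image channel order)
MEAN_VALUES = (78.4263377603, 87.7689143744, 114.895847746)


def blob_from_resized(face, mean=MEAN_VALUES, scalefactor=1.0):
    """Mimic cv2.dnn.blobFromImage for an HxWx3 image that is already 227x227
    (resizing omitted, no channel swap). Returns a float32 NCHW array."""
    img = face.astype(np.float32)
    # subtract the mean of each channel, then scale: (input - mean) * scalefactor
    img = (img - np.asarray(mean, dtype=np.float32)) * scalefactor
    # HWC -> CHW, then add the batch axis -> NCHW
    return img.transpose(2, 0, 1)[np.newaxis, ...]
```

For a real face crop, `cv2.dnn.blobFromImage` remains the simpler choice; the sketch only shows what the network receives as input.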

Levi, G., & Hassner, T. (2015). Age and gender classification using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (pp. 34-42).

Documentation

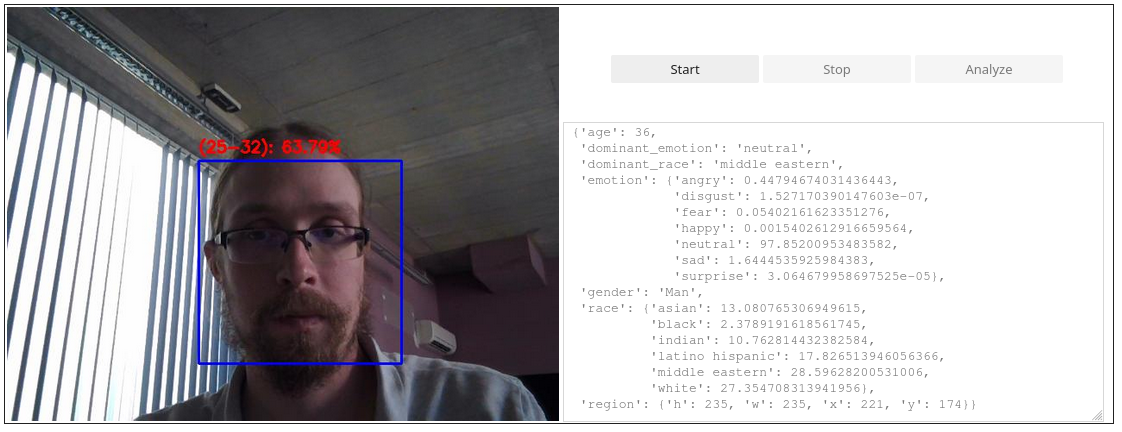

Demo

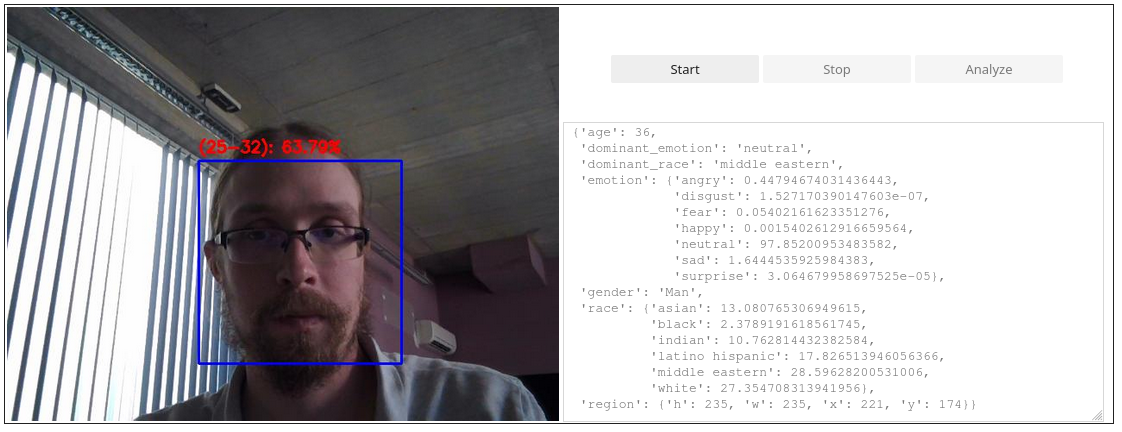

To showcase some of the existing pretrained models, we integrated DeepFace and an OpenCV-compatible model (Levi and Hassner; see the Methods section for details) into an OpenCV-based live image-acquisition GUI built with Jupyter. The age group predicted by the OpenCV-based model is shown as an overlay on the recorded image, while the more thorough analysis by the DeepFace model is computed on demand and shown in the output window on the right.

See the code on GitHub


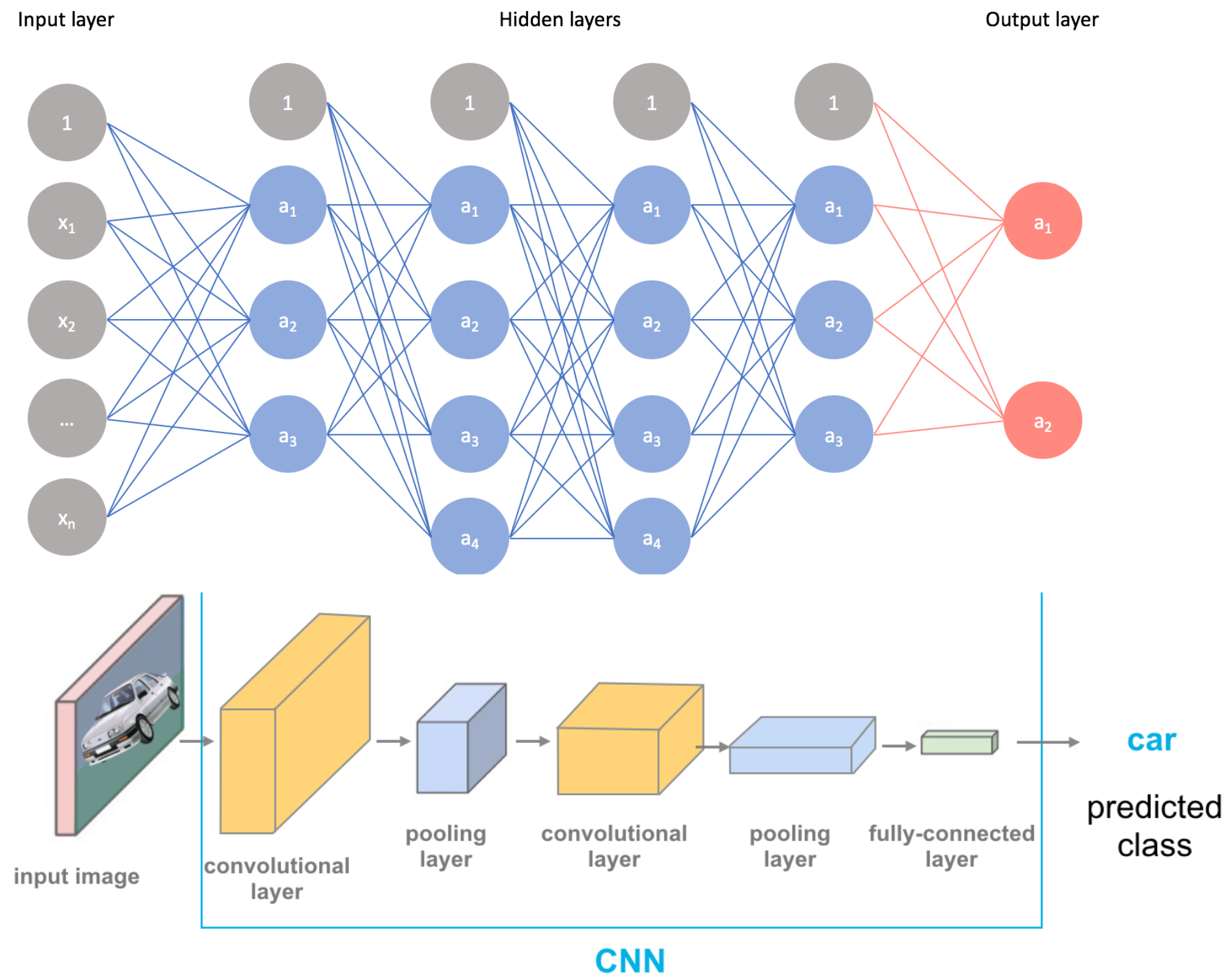

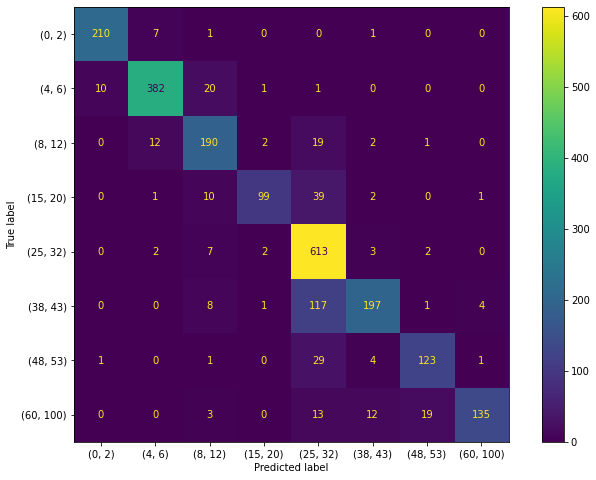

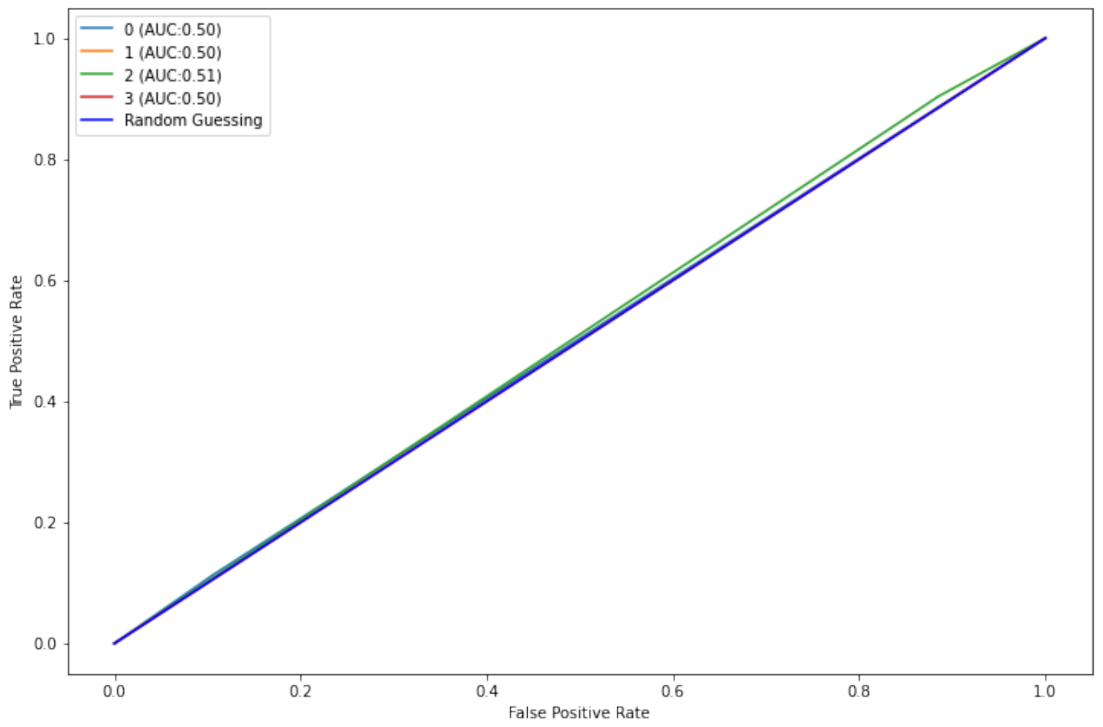

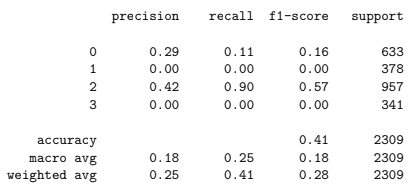

Third approach: Custom neural network, based on EfficientNet

For the third approach, a custom network was built on top of EfficientNet.

A batch normalization layer was added in front of the EfficientNetB1 backbone. After the backbone, batch normalization, 2D global max pooling and dropout layers are added before the final fully connected layer, which maps the features extracted by EfficientNet to the age groups.

The model definition is given below. The network was defined and initialized in Keras, with ImageNet-pretrained weights for EfficientNetB1.

```python
from tensorflow.keras import Sequential
from tensorflow.keras.applications import EfficientNetB1
from tensorflow.keras.layers import BatchNormalization, Dense, Dropout, GlobalMaxPooling2D

# Define the model architecture
base_efficientnet_model = EfficientNetB1(input_shape=(240, 240, 3),
                                         include_top=False, weights='imagenet')
age_model = Sequential()
age_model.add(BatchNormalization(input_shape=(240, 240, 3)))
age_model.add(base_efficientnet_model)
age_model.add(BatchNormalization())
age_model.add(GlobalMaxPooling2D())
age_model.add(Dropout(0.5))
age_model.add(Dense(4, activation='softmax'))  # one output per age group
```
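To illustrate what the layers after the backbone compute at inference time, the NumPy sketch below applies them to a single backbone feature map: batch normalization with its moving statistics (using Keras' default epsilon of 1e-3), global max pooling over the spatial dimensions, dropout (which is inactive at inference), and the dense softmax layer. The function `head_inference` and the random parameters in the test are illustrative only; the 8×8×1280 feature-map size used there is our assumption for a 240×240 input to EfficientNetB1.

```python
import numpy as np


def head_inference(features, bn_gamma, bn_beta, bn_mean, bn_var, weights, bias, eps=1e-3):
    """Inference-time forward pass of the classification head on one feature map.

    features: (H, W, C) output of the EfficientNetB1 backbone
    bn_*: per-channel BatchNormalization parameters, each of shape (C,)
    weights, bias: dense-layer parameters of shape (C, n_groups) and (n_groups,)
    """
    # BatchNormalization at inference: normalise each channel with moving statistics
    x = bn_gamma * (features - bn_mean) / np.sqrt(bn_var + eps) + bn_beta
    # GlobalMaxPooling2D: keep the strongest activation of every channel -> (C,)
    x = x.max(axis=(0, 1))
    # Dropout(0.5) is only active during training, so it is the identity here
    logits = x @ weights + bias
    # softmax over the age groups (shifted by the maximum for numerical stability)
    e = np.exp(logits - logits.max())
    return e / e.sum()
```

Global max pooling keeps only the strongest response of each of the C feature channels, so the dense layer sees a fixed-length vector regardless of the spatial size of the feature map.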

Documentation