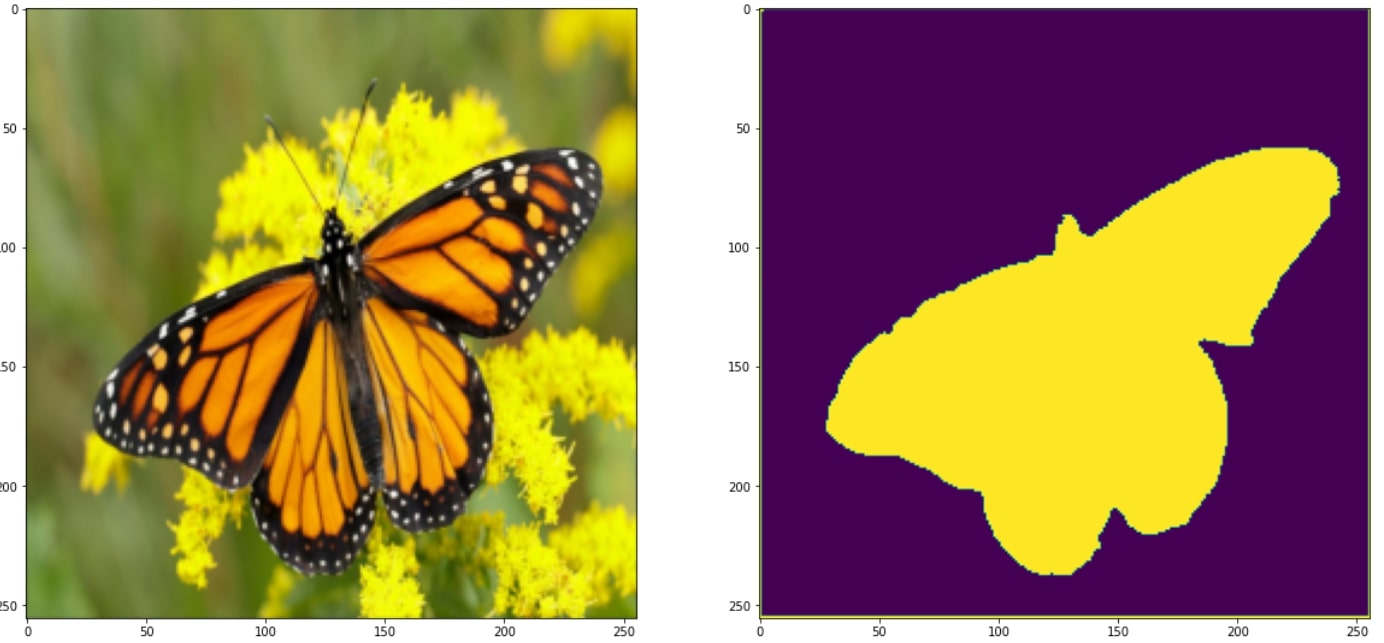

Semantic segmentation for butterflies using U-Net architecture - Luka Ivanić

Why segment?

Segmentation isn't an easy task, so why is it worthwhile? It is reasonable to expect that isolating the butterfly in an image improves both the classifier's results and its training speed. Without the background, the classifier has a much easier job of fitting its parameters.
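As a rough illustration of what "isolating the butterfly" means in practice, the sketch below blanks out every pixel outside a binary mask. The function name `apply_mask`, the `background` parameter, and the height x width x channels array layout are assumptions made for this example, not details taken from the project.

```python
import numpy as np

def apply_mask(image, mask, background=0):
    """Keep pixels where mask is truthy; fill the rest with `background`.

    Assumes `image` has shape (height, width, channels) and `mask` has
    shape (height, width). Both names are hypothetical.
    """
    image = np.asarray(image)
    mask = np.asarray(mask).astype(bool)
    if image.ndim != 3 or mask.shape != image.shape[:2]:
        raise ValueError("expected an HxWxC image and an HxW mask")
    # Add a trailing axis so the 2-D mask broadcasts over the channels.
    return np.where(mask[..., None], image, background).astype(image.dtype)

# Tiny 2x2 RGB image; only the top-left pixel belongs to the butterfly.
image = np.full((2, 2, 3), 200, dtype=np.uint8)
mask = np.array([[1, 0], [0, 0]])
isolated = apply_mask(image, mask)
```

The classifier would then be trained on images like `isolated` instead of the raw photos.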

What precision are we aiming for?

We reached a precision of 98%, but that level is hardly necessary. The classifier quickly learns to ignore a few errors in a segmented image, so any precision above 90% is plenty.
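The text does not say exactly how this figure was measured. Two common choices for a binary mask are pixel accuracy (the share of pixels labeled correctly) and precision in the strict sense (the share of predicted butterfly pixels that really are butterfly). The sketch below computes both; the function names and the toy masks are made up for illustration.

```python
import numpy as np

def pixel_accuracy(pred, truth):
    """Fraction of pixels where the predicted mask equals the true mask."""
    pred = np.asarray(pred).astype(bool)
    truth = np.asarray(truth).astype(bool)
    return float((pred == truth).mean())

def pixel_precision(pred, truth):
    """Fraction of predicted foreground pixels that are truly foreground."""
    pred = np.asarray(pred).astype(bool)
    truth = np.asarray(truth).astype(bool)
    predicted_positive = int(pred.sum())
    if predicted_positive == 0:
        return 0.0  # convention chosen here: no predictions means precision 0
    return float((pred & truth).sum() / predicted_positive)

# Toy 2x4 masks: the prediction marks one background pixel as butterfly.
truth = np.array([[1, 1, 0, 0],
                  [1, 1, 0, 0]])
pred = np.array([[1, 1, 1, 0],
                 [1, 1, 0, 0]])
accuracy = pixel_accuracy(pred, truth)    # 7 of 8 pixels agree
precision = pixel_precision(pred, truth)  # 4 of 5 predicted pixels are correct
```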

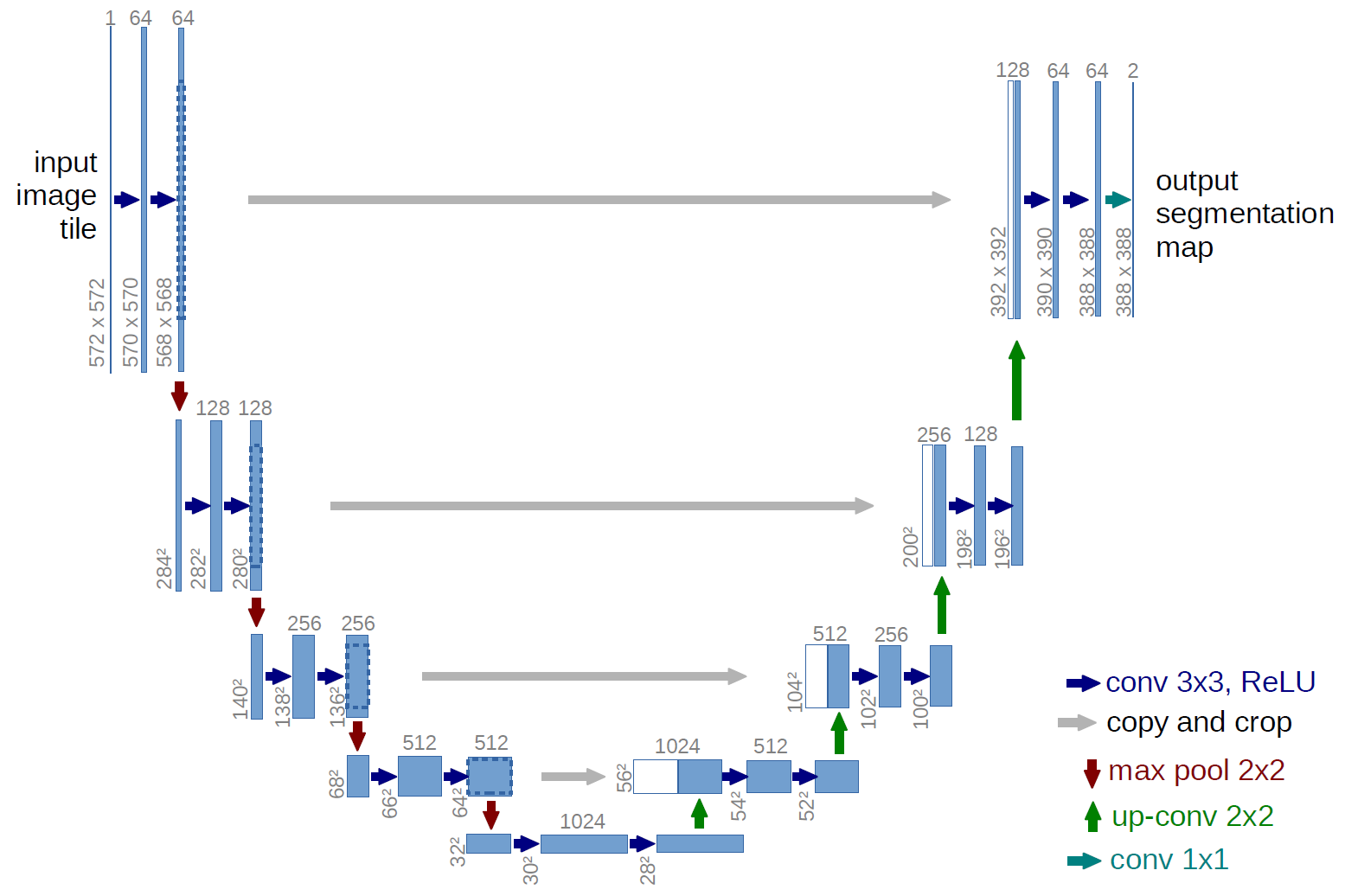

How is it done?

We followed the U-Net architecture, which is a standard for segmentation. The architecture is not hard to express in code, so we implemented it ourselves. The toughest part was configuring parameters such as the image size.
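One reason image size can be tricky in a U-Net-style network is that each downsampling step halves the feature map, and each upsampling step must land on exactly the size of the matching skip connection. The sketch below traces those sizes. It assumes size-preserving ("same"-padded) convolutions, 2x2 pooling, 2x upsampling, and a depth of 4; the project's actual settings are not given in the text, and the function names are hypothetical.

```python
def unet_feature_sizes(image_size, depth=4):
    """Trace spatial sizes through a U-Net-style encoder and decoder.

    Assumes convolutions keep the size unchanged, each encoder level halves
    it with 2x2 pooling (rounding down), and each decoder level doubles it.
    Returns the encoder sizes and (upsampled, skip) pairs for the decoder.
    """
    encoder = [image_size]
    for _ in range(depth):
        encoder.append(encoder[-1] // 2)

    decoder = []
    size = encoder[-1]
    for skip in reversed(encoder[:-1]):
        size *= 2
        decoder.append((size, skip))
    return encoder, decoder

def is_valid_input_size(image_size, depth=4):
    """True if every upsampled map matches its skip connection in size."""
    _, decoder = unet_feature_sizes(image_size, depth)
    return all(upsampled == skip for upsampled, skip in decoder)

encoder_256, decoder_256 = unet_feature_sizes(256)
ok_256 = is_valid_input_size(256)  # 256 halves cleanly four times
ok_250 = is_valid_input_size(250)  # 250 -> 125 -> 62 -> 31 -> 15 loses pixels
```

Under these assumptions, an input side length works without cropping or padding only when it is divisible by 2 raised to the depth.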

Challenges

The biggest challenge was preparing the input data for training. Not all training data comes in the same format, so some transformation is always needed beforehand. Google Colab resources were also a problem at times because of the daily GPU limits.
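The text does not list which formats had to be reconciled. As one hypothetical example of this kind of transformation, the sketch below converts grayscale, RGB, and RGBA arrays into a single float32 RGB layout with values in [0, 1]. The function name and the assumptions about the inputs (8-bit integers, or floats already scaled to [0, 1]) are illustrative only.

```python
import numpy as np

def to_common_format(image):
    """Convert a grayscale, RGB, or RGBA array to float32 RGB in [0, 1].

    Assumes integer inputs are 8-bit (0..255) and float inputs are already
    scaled to [0, 1]. Hypothetical helper, not the project's pipeline.
    """
    arr = np.asarray(image)
    if np.issubdtype(arr.dtype, np.integer):
        arr = arr.astype(np.float32) / 255.0
    else:
        arr = arr.astype(np.float32)

    if arr.ndim == 2:
        # Grayscale without a channel axis: repeat it three times.
        arr = np.stack([arr, arr, arr], axis=-1)
    elif arr.ndim == 3 and arr.shape[-1] == 1:
        arr = np.repeat(arr, 3, axis=-1)
    elif arr.ndim == 3 and arr.shape[-1] == 4:
        arr = arr[..., :3]  # drop the alpha channel
    elif not (arr.ndim == 3 and arr.shape[-1] == 3):
        raise ValueError(f"unsupported image shape: {arr.shape}")
    return arr

gray = np.full((4, 4), 255, dtype=np.uint8)
rgba = np.full((4, 4, 4), 0.5, dtype=np.float64)
gray_rgb = to_common_format(gray)
rgba_rgb = to_common_format(rgba)
```

Resizing to the network's input size would be a separate step and is left out here.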

Occasional mishaps

Sometimes the model doesn't do the best job, but the result is still good enough to pass on to the classifier.

Different perspective

Here we can see the model performs well even when the butterfly wings are not perfectly presented.

Different angle

As we can see, a different angle is no problem for the model.