When registering, students will pick five projects from this list, in order of preference. Their choices will be taken into account when assigning them to specific project teams. Countries of origin (spoken languages), home universities and students’ backgrounds will also be considered, with the aim of making the teams as heterogeneous as possible: this balances the teams and enables students to establish new contacts.

The teams will consist of three or four students. Each student will work in one team. Team assignments will be announced on the first day of the school.

The goal of team engagement in project work is to:

- encourage team members to establish new contacts with other members of the academic community,

- collaborate on project tasks,

- exchange knowledge and experience,

- choose team roles according to interests and experience,

- share tasks so that the team is well balanced and achieves the best results.

This is a tentative list of projects.

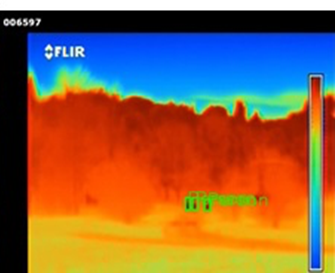

P1: Person detection in thermal images

Detection involves localization of the person (at bounding box level). Multiple persons may be present in the image and should all be detected. Evaluate detection performance in terms of average precision (AP).

Input: Thermal image (frame) from surveillance video recordings.

Output: Detection of each person in the image (bounding box with confidence score).

Dataset: http://ieee-dataport.org/open-access/thermal-image-dataset-person-detection-uniri-tid

Remarks: Detectors that perform well on visible-spectrum images can also be used successfully on thermal images. Domain adaptation and re-training the model on (part of) the thermal dataset can improve detection results.
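A minimal sketch of the AP evaluation mentioned above, in plain Python. It assumes boxes are given as (x1, y1, x2, y2) pixel corners, processes detections in order of decreasing confidence, matches each one to the ground-truth box it overlaps most, and counts it as a false positive if that overlap is below a single IoU threshold of 0.5 (a Pascal VOC-style choice) or the box is already matched. AP is computed with all-point interpolation. The function names are hypothetical; established evaluation tools (for example the COCO API, which averages AP over several IoU thresholds) differ in details, so reported results should come from one of them. The same evaluation applies to P2.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    detections: List[Tuple[str, float, Box]],  # (image_id, confidence, box)
    ground_truth: Dict[str, List[Box]],        # image_id -> annotated person boxes
    iou_threshold: float = 0.5,
) -> float:
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    taken = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    is_tp: List[bool] = []
    # Process detections from most to least confident.
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        overlaps = [iou(box, gt) for gt in ground_truth.get(img, [])]
        best = max(range(len(overlaps)), key=overlaps.__getitem__, default=-1)
        if best >= 0 and overlaps[best] >= iou_threshold and not taken[img][best]:
            taken[img][best] = True
            is_tp.append(True)
        else:
            is_tp.append(False)  # duplicate, poorly localized, or background detection
    # Precision and recall after each detection in the ranked list.
    precision, recall, tp = [], [], 0
    for k, hit in enumerate(is_tp, start=1):
        tp += hit
        precision.append(tp / k)
        recall.append(tp / n_gt)
    # All-point interpolation: make precision non-increasing from the right.
    for k in range(len(precision) - 2, -1, -1):
        precision[k] = max(precision[k], precision[k + 1])
    # Area under the stepwise precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

In this sketch a confident false positive ranked above two correct detections lowers AP from 1.0 to 2/3, which shows why the confidence scores matter as much as the boxes.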

P2: Person detection in drone images

Detection involves localization of the person (at bounding box level). Multiple persons may be present in the image and should all be detected. Evaluate detection performance in terms of average precision (AP).

Input: Drone images (frames) of search and rescue operations.

Output: Detection of each person in the image (bounding box with confidence score).

Dataset: https://ieee-dataport.org/documents/search-and-rescue-image-dataset-person-detection-sard

P3: Counting objects – person detection

Counting persons in an image or video.

Input: Image or video streams of persons.

Output: Detection of persons in the image (bounding box with confidence score) plus the number of persons in the input image.

Requirements: Detection involves localization of the objects (at bounding box level) and classification. Evaluate detection performance in terms of average precision (AP).
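Counting can be built directly on top of the detector output: the count is the number of detections that remain after discarding low-confidence boxes and suppressing duplicate boxes of the same object (greedy non-maximum suppression, NMS). The sketch below assumes detections as (confidence, box) pairs with (x1, y1, x2, y2) boxes; both thresholds are hypothetical defaults that would need tuning on validation data, and many detectors already apply NMS internally, in which case only the confidence threshold is needed. The same approach applies to counting windows (P4) and cars (P6).

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[float, Box]            # (confidence, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(dets: List[Detection], iou_threshold: float = 0.5) -> List[Detection]:
    """Greedy NMS: keep the most confident box, drop boxes overlapping a kept box too much."""
    kept: List[Detection] = []
    for det in sorted(dets, key=lambda d: d[0], reverse=True):
        if all(iou(det[1], k[1]) < iou_threshold for k in kept):
            kept.append(det)
    return kept


def count_objects(dets: List[Detection], score_threshold: float = 0.5,
                  iou_threshold: float = 0.5) -> int:
    """Number of objects = confident detections that survive NMS."""
    confident = [d for d in dets if d[0] >= score_threshold]
    return len(non_max_suppression(confident, iou_threshold))
```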

P4: Counting objects – windows detection

Counting windows.

Input: Image or video streams of the buildings.

Output: Detection of windows in the image (bounding box with confidence score) plus the number of windows in the input.

Requirements: Detection involves localization of the objects (at bounding box level) and classification. Evaluate detection performance in terms of average precision (AP).

P5: Licence plate detection and recognition

Licence plate detection and recognition of plate numbers and signs.

Input: A digital image or video stream of cars.

Output: Detection of the licence plate in the image (bounding box) and recognition of the plate number.

Dataset: https://www.kaggle.com/andrewmvd/car-plate-detection

Requirements: Plate detection involves localization of the plate (at bounding box level) and recognition of plate numbers and signs (OCR).
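To evaluate the recognition (OCR) part, the recognized string can be compared with the ground-truth plate string character by character. The sketch below is one possible choice, not a prescribed metric: it normalizes both strings (uppercase, letters and digits only, which assumes separators such as spaces and hyphens are not significant) and reports the character error rate, i.e. the Levenshtein edit distance divided by the length of the ground truth. All function names are hypothetical.

```python
def normalize_plate(text: str) -> str:
    """Uppercase and keep only letters and digits (assumes separators don't matter)."""
    return "".join(ch for ch in text.upper() if ch.isalnum())


def levenshtein(a: str, b: str) -> int:
    """Minimum number of insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                 # delete ca
                           cur[j - 1] + 1,              # insert cb
                           prev[j - 1] + (ca != cb)))   # substitute (or match)
        prev = cur
    return prev[-1]


def character_error_rate(predicted: str, truth: str) -> float:
    """Edit distance between normalized strings, relative to the ground-truth length."""
    p, t = normalize_plate(predicted), normalize_plate(truth)
    if not t:
        return 0.0 if not p else 1.0
    return levenshtein(p, t) / len(t)
```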

P6: Counting objects – counting cars

Count all cars in the image, give every car a unique id (number).

Input: A digital image or video streams of cars in a parking lot.

Output: Detection of cars in the image (bounding box with confidence score) plus the number of cars in the input.

Dataset: http://cnrpark.it/

Requirements: Detection involves localization of the objects (at bounding box level) and classification. Evaluate detection performance in terms of average precision (AP).

P7: Traffic signs detection and recognition

Traffic sign detection and recognition.

Input: A digital image or video stream of street scenes with traffic signs.

Output: Detection of traffic signs in the image (bounding boxes) and recognition of each sign.

Datasets: https://benchmark.ini.rub.de/gtsrb_news.html

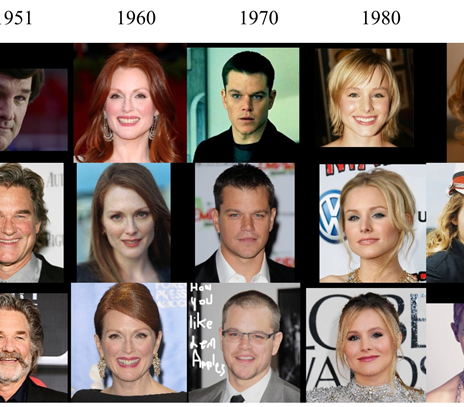

P8: Age recognition

Recognizing the age of a person based on a facial image (the age can be recognized as one of several classes: teenager, young, middle age, old).

Input: A digital image of a face.

Output: Age (or age class) of the person in the image.

Datasets: https://www.face-rec.org/databases/

P9: Artistic style transfer

Generate new images by applying artistic style transfer to existing real-world images.

Input: Real-world image

Output: Images generated by artistic style transfer.
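One widely used approach is neural style transfer (Gatys et al.), which represents the style of an image by Gram matrices (channel correlations) of feature maps from a pretrained CNN, commonly VGG, and optimizes the generated image so that its Gram matrices match those of the style image while its deeper features match the content image. The sketch below shows only the Gram-matrix style loss for one layer, on feature maps given as plain Python lists (C channels, each flattened to H*W values). Feature extraction, the content loss and the optimization loop are left out, and the normalization used here is one choice among several found in practice, not the exact constant from the original paper.

```python
from typing import List

FeatureMap = List[List[float]]  # C channels, each flattened to H*W activations


def gram_matrix(features: FeatureMap) -> List[List[float]]:
    """G[i][j] = mean over spatial positions of channel_i * channel_j."""
    n = len(features[0])
    return [
        [sum(fi[k] * fj[k] for k in range(n)) / n for fj in features]
        for fi in features
    ]


def style_loss(generated: FeatureMap, style: FeatureMap) -> float:
    """Mean squared difference between the two Gram matrices (single layer)."""
    g, s = gram_matrix(generated), gram_matrix(style)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(c) for j in range(c)) / (c * c)
```

Because the Gram matrix sums over all positions, it discards where features occur and keeps which features occur together, which is why it captures texture and colour style rather than layout.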

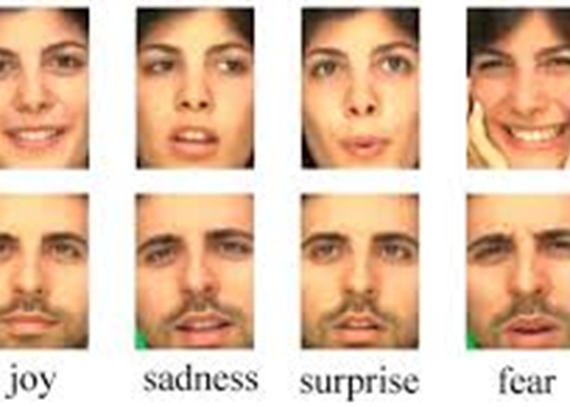

P10: Face expression recognition

Recognizing a person’s facial expression as one of the classes: angry, disgust, fear, happy, neutral, sad, surprise.

Input: A digital portrait.

Output: Facial expression mapped to one of the expression classes: angry, disgust, fear, happy, neutral, sad, surprise.

Datasets: https://www.kaggle.com/jonathanoheix/face-expression-recognition-dataset

P11: Smile!

Design a software tool that takes a photo only when everyone in front of the camera smiles.

Minimum requirement: The program reads an image with up to 5 people’s faces visible to the camera in front of a diverse background. The program detects the faces and determines the number of smiling faces. If everyone smiles, it gives a special “message”.

Advanced solution: Control a webcam to take a real-life still image when everyone smiles.

Building a database of required photos is part of the task.
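Once faces are detected and each face is scored by a smile classifier, the decision rule of the minimum requirement is simple. The sketch below assumes a hypothetical upstream step that returns one smile probability per detected face; the 0.5 threshold and the message text are placeholders. Treating an image with no detected faces as "not everyone smiles" is a design choice. In the advanced solution, the same check could run on every webcam frame, with the still image saved the first time it succeeds.

```python
from typing import Sequence


def count_smiling(smile_scores: Sequence[float], threshold: float = 0.5) -> int:
    """Number of faces whose (hypothetical) smile probability reaches the threshold."""
    return sum(1 for s in smile_scores if s >= threshold)


def everyone_smiles(smile_scores: Sequence[float], threshold: float = 0.5) -> bool:
    """True only if at least one face was detected and every detected face smiles."""
    return len(smile_scores) > 0 and count_smiling(smile_scores, threshold) == len(smile_scores)


def status_message(smile_scores: Sequence[float], threshold: float = 0.5) -> str:
    """Placeholder message shown to the user."""
    smiling = count_smiling(smile_scores, threshold)
    if everyone_smiles(smile_scores, threshold):
        return f"Everyone is smiling ({smiling}/{len(smile_scores)}): take the photo!"
    return f"{smiling}/{len(smile_scores)} faces smiling, waiting..."
```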

P12: Butterfly recognition and retrieval

Build a butterfly recognition framework which returns a list of potential matches ranked according to the similarity to a query image.

The problem involves several subtasks: coarse localization of the butterfly; segmentation (hint: symmetry, color and shape might help); appearance representation and matching (hint: analyzing the dataset with respect to discriminative features might be necessary); and evaluation, e.g. how recognition performance depends on the size of the training dataset and which species are similar to each other (confusion).

Dataset: http://www.comp.leeds.ac.uk/scs6jwks/dataset/leedsbutterfly/

Remarks: Additional butterfly data (beyond the provided link) can be found easily using search engines.
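The retrieval step (a ranked list of potential matches for a query image) can be sketched as nearest-neighbour search over appearance descriptors. The code below assumes each image has already been turned into a fixed-length feature vector, which is the core of the project, and ranks labelled gallery images by cosine similarity to the query. The labels and vectors in the test are made up. Checking whether the correct species appears among the top k matches gives a simple way to study how performance depends on the training-set size.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two descriptors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_matches(query: Sequence[float],
                 gallery: List[Tuple[str, Sequence[float]]],
                 top_k: int = 5) -> List[Tuple[str, float]]:
    """Return the top_k (species_label, similarity) pairs, most similar first."""
    scored = [(label, cosine_similarity(query, vec)) for label, vec in gallery]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


def top_k_hit(query: Sequence[float], true_label: str,
              gallery: List[Tuple[str, Sequence[float]]], k: int = 5) -> bool:
    """Whether the correct species is among the k best matches."""
    return true_label in [label for label, _ in rank_matches(query, gallery, k)]
```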

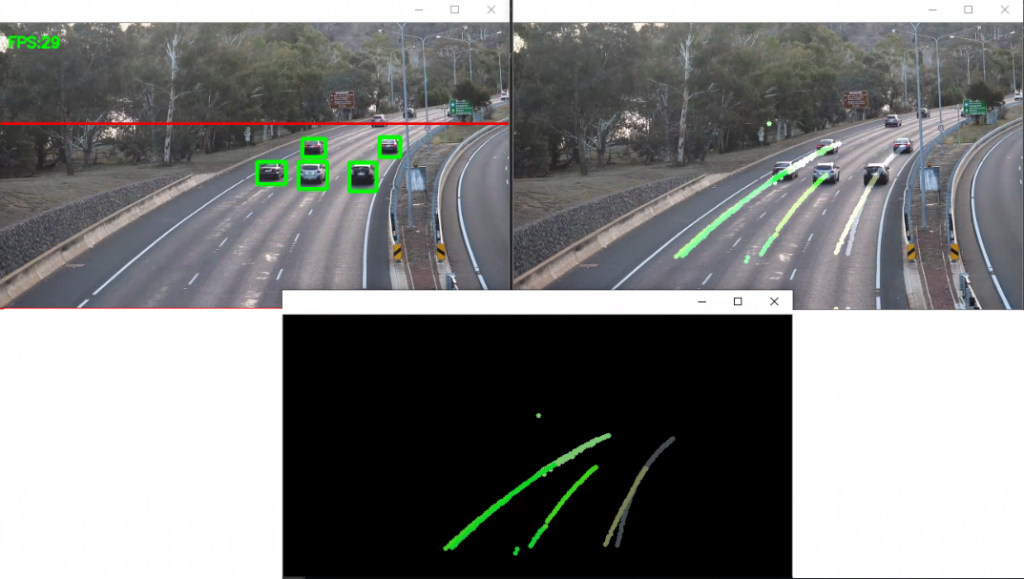

P13: Highway traffic monitoring

Analyse surveillance videos of highways. The vehicles should be separated and tracked while they are visible. The team should take into account shadows, different times of day and different weather conditions. The team should also implement detection of abnormal vehicle movements, making it possible to detect accidents.

Dataset: Input videos can be found on YouTube by searching for “highway traffic surveillance videos”.
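Tracking a vehicle while it is visible means linking its detections across frames under a persistent id. The sketch below is a deliberately simple baseline that greedily matches object centroids between consecutive frames by distance. It assumes a detector supplies one centroid per vehicle per frame; the distance limit (pixels) and the missed-frame limit are hypothetical values. Established trackers such as SORT add motion prediction (a Kalman filter) and optimal assignment (the Hungarian algorithm), which cope much better with occlusions and crossing vehicles.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


class CentroidTracker:
    """Greedy nearest-centroid tracker that gives each object a persistent id."""

    def __init__(self, max_distance: float = 50.0, max_missed: int = 5):
        self.max_distance = max_distance  # max centroid shift between frames (pixels)
        self.max_missed = max_missed      # frames a track may go undetected before removal
        self.next_id = 0
        self.tracks: Dict[int, Point] = {}
        self.missed: Dict[int, int] = {}

    def update(self, centroids: List[Point]) -> Dict[int, Point]:
        """Match this frame's centroids to tracks; return {track_id: centroid}."""
        pairs = sorted(
            (math.dist(pos, c), tid, i)
            for tid, pos in self.tracks.items()
            for i, c in enumerate(centroids)
        )
        assigned: Dict[int, Point] = {}
        used = set()
        for dist, tid, i in pairs:  # closest track/detection pairs first
            if dist > self.max_distance:
                break
            if tid in assigned or i in used:
                continue
            assigned[tid] = centroids[i]
            used.add(i)
        # Update matched tracks; age unmatched ones and drop them after too many misses.
        for tid in list(self.tracks):
            if tid in assigned:
                self.tracks[tid] = assigned[tid]
                self.missed[tid] = 0
            else:
                self.missed[tid] += 1
                if self.missed[tid] > self.max_missed:
                    del self.tracks[tid], self.missed[tid]
        # Unmatched detections start new tracks with fresh ids.
        for i, c in enumerate(centroids):
            if i not in used:
                self.tracks[self.next_id] = c
                self.missed[self.next_id] = 0
                assigned[self.next_id] = c
                self.next_id += 1
        return assigned
```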

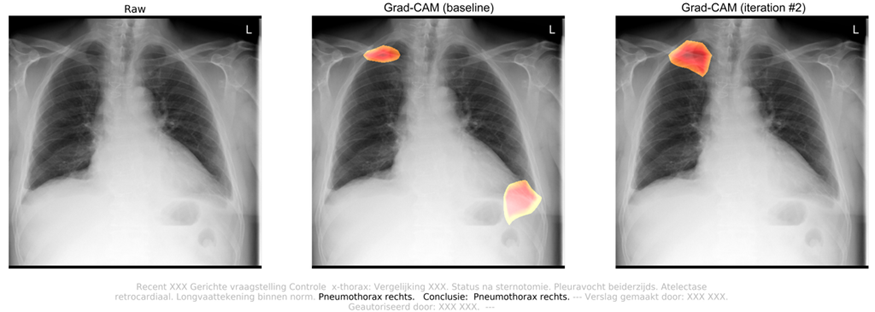

P14: Identify pneumothorax disease

Develop a model to classify (and if present, segment) pneumothorax from a set of chest radiographic images.

Description and data: https://www.kaggle.com/c/siim-acr-pneumothorax-segmentation/
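For the segmentation part, the overlap between a predicted and an annotated pneumothorax mask is commonly measured with the Dice coefficient, 2|X ∩ Y| / (|X| + |Y|). The sketch below works on flattened binary masks in plain Python and, as a convention, scores 1.0 when both masks are empty, so that correctly predicting "no pneumothorax" is not penalized. The competition page describes its own scoring, which should be checked before comparing numbers.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice overlap of two flattened binary masks (1 = pneumothorax pixel)."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:
        return 1.0  # both masks empty: treated as a perfect (negative) prediction
    return 2.0 * intersection / total
```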

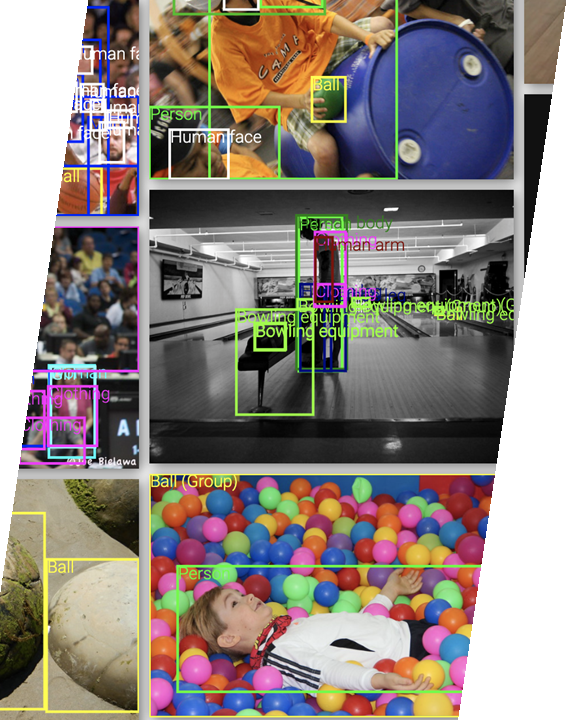

P15: Ball detection, ball segmentation

Ball detection (at bounding box level) or ball segmentation.

Input: Images of balls.

Output: Ball detection in the image (bounding box with confidence score) or ball segmentation masks.

Dataset: https://storage.googleapis.com/openimages/web/factsfigures.html

Requirements: Multiple ball instances may be present and need to be detected in the image.

P16: Gesture recognition

Recognition of hand gestures (24 categories of hand gestures).

Input: Images of hands.

Output: Hand localisation (bounding box) and the gesture class.

Dataset: https://www.kaggle.com/c/hgr/data

P17: Detection of roadside vegetation

Vegetation detection is used in robotics for navigating autonomous vehicles in on-road and off-road scenarios, detecting weeds near railways, and maintaining roadside infrastructure.

Input: Still image or video stream.

Output: Segmented area.

Remarks: Images in the visible part of the spectrum can be used. Using near-infrared (NIR) images makes the classification problem easier, because chlorophyll-rich vegetation strongly reflects NIR light.
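The NIR remark can be made concrete with the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), which is high for healthy vegetation (strong NIR reflectance, strong red absorption) and low for surfaces such as soil, asphalt or concrete. The sketch below assumes co-registered NIR and red bands given as nested lists of non-negative reflectance values; the 0.3 threshold for the vegetation mask is a hypothetical starting point that would need tuning, and visible-light-only images would require a different (for example, learned) segmentation approach.

```python
from typing import List

Band = List[List[float]]  # one image band, rows of pixel values


def ndvi(nir: Band, red: Band, eps: float = 1e-9) -> Band:
    """Per-pixel NDVI; eps avoids division by zero on completely dark pixels."""
    return [
        [(n - r) / (n + r + eps) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir, red)
    ]


def vegetation_mask(nir: Band, red: Band, threshold: float = 0.3) -> List[List[int]]:
    """Segmented area: 1 where NDVI exceeds the (hypothetical) threshold, else 0."""
    return [[1 if v > threshold else 0 for v in row] for row in ndvi(nir, red)]
```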

P18: Binary tomography

Calculate projections of binary images in a few directions (e.g. with the Radon transform in MATLAB, ImageJ or Python). Try to reconstruct the original image from the projections; this can be solved by optimization. Improve the reconstruction quality by using prior knowledge: binary values, homogeneity and structural information (Discrete Tomography).
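For the special case of only two projection directions (row sums and column sums), a binary image consistent with the projections can be constructed exactly by a classic greedy method going back to Ryser: fill each row with ones in the columns that still need the most ones. The sketch below uses plain Python lists instead of a Radon-transform library and swaps the optimization approach for this combinatorial construction, so it covers only the axis-aligned case. The test illustrates why prior knowledge matters: the reconstruction has exactly the same projections as the original image, yet it is a different image.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # binary image, rows of 0/1 values


def projections(img: Image) -> Tuple[List[int], List[int]]:
    """Horizontal and vertical projections: row sums and column sums."""
    return [sum(row) for row in img], [sum(col) for col in zip(*img)]


def reconstruct(row_sums: List[int], col_sums: List[int]) -> Optional[Image]:
    """Greedy reconstruction from row/column sums; None if no binary image fits them."""
    n_cols = len(col_sums)
    remaining = list(col_sums)  # ones still needed in each column
    img: Image = []
    for r in row_sums:
        if r > n_cols:
            return None
        # Place this row's ones in the columns with the largest remaining demand.
        columns = sorted(range(n_cols), key=lambda j: remaining[j], reverse=True)[:r]
        row = [0] * n_cols
        for j in columns:
            if remaining[j] == 0:
                return None  # projections are inconsistent
            row[j] = 1
            remaining[j] -= 1
        img.append(row)
    return img if not any(remaining) else None
```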

P19: Food classification

Build an automated vision-based food classification system. The system should recognize the content of a plate/bowl based on one (or a few) input pictures.

Datasets: Food-11 and Food-5K datasets (http://mmspg.epfl.ch/food-image-datasets); Food Dataset (http://iplab.dmi.unict.it/madima2015/)

Define your own categorization granularity (for example the 11 major food categories of the Food-11 dataset).

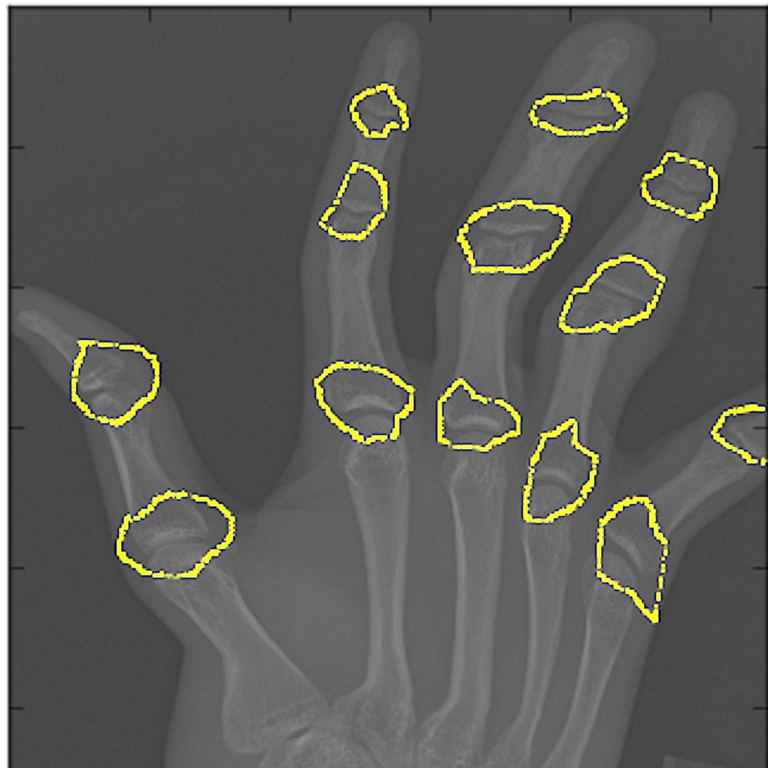

P20: Bone age estimation

Develop a method for accurately determining skeletal age from paediatric hand radiographs, using a curated, annotated dataset.

Input: Annotated X-ray dataset

Output: Age

Dataset: https://www.kaggle.com/kmader/rsna-bone-age
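A natural way to measure how accurate the age estimates are is the mean absolute error (MAE) between predicted and annotated ages, compared against a trivial baseline that always predicts the mean age of the training set; a useful method should clearly beat that baseline. The sketch below assumes ages expressed in months (the units used in the dataset's label file should be checked), and the numbers in the test are made up.

```python
from typing import Sequence


def mean_absolute_error(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Average absolute difference between predicted and annotated ages."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def mean_baseline_mae(train_ages: Sequence[float], test_ages: Sequence[float]) -> float:
    """MAE of a model that always predicts the mean training age."""
    mean_age = sum(train_ages) / len(train_ages)
    return mean_absolute_error([mean_age] * len(test_ages), test_ages)
```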